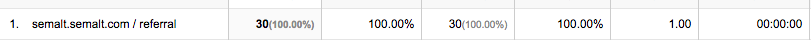

If you are like most site owners, your site has been hit by a spam crawler called Semalt. There are quite a few people in Google’s product forum asking how to remove it, and chances are you found this post while looking for a way to get Semalt spam out of your Google Analytics. If you see the Semalt.Semalt result in your GA referral traffic, read on to learn how to remove the annoyance.

This post will help you remove Semalt traffic from your Google Analytics data. Unfortunately, this fix does not work retroactively; it only affects traffic from this point on.

First, a quick word on the crawler and how it affects your data. Google is not the only crawler on the web. In fact, numerous sites use crawlers for various purposes. However, this company from Russia, which I won’t link to, is using its crawler to send traffic to a lot of websites. This doesn’t hurt your analytics per se, but it does give you skewed data. Because the activity is bot generated, it distorts the numbers used to calculate page views, bounce rate, and time on page, so it is best to remove it.

Why remove it?

I have heard of some users opting into Semalt’s own service to remove the crawler from their site, which I would not recommend. Some have tried setting up filters (myself included) and found that the crawler gets around them by using different host names. In addition, a small portion of websites are using the boost in page views and traffic to skew their data and claim their overall site traffic is increasing. The spam traffic affects page views, bounce rates, referral information, and many other categories. Inferring business intelligence from data is hard enough, so why complicate things? Let’s remove it, shall we?

Here is how to easily remove the Semalt spam crawler

You will need access to your web server, and you must be able to edit files and place them back on the server. If you don’t have access to your website’s files, you will need to contact your webmaster. They should be able to either do this for you or give you an FTP account so you can do it yourself.

Once you have access to your web server files, look for one called .htaccess. This file lets you create rules that tell the server what traffic is and is not allowed. We are going to set a rule to block Semalt traffic. Copy the following into your .htaccess file, save it, and place the new copy back on your server. If you are using FileZilla, don’t forget to allow the new file to “replace” the older file. From here on out you should notice a reduction in traffic from Semalt.com.

    # block visitors referred from semalt.com
    RewriteEngine On
    RewriteCond %{HTTP_REFERER} ^http://.*semalt.com [NC]
    RewriteRule .* - [F]

You should always understand what code does before you copy and paste it from the internet and apply it to your website. So let me explain what each of these lines means, and you can decide if this makes sense for your website.

# block visitors referred from semalt.com

The first line is a comment, indicated by the # sign. Apache ignores any line that begins with #, so it serves as a human-readable note for remembering what the code does. Note that the # has to start the line; Apache does not allow a comment on the same line as a directive.

RewriteEngine On

If you want to fully understand what a rewrite engine is, there is no better place to look than the Apache documentation, since Apache is one of the most widely used web servers. In layman’s terms, a rewrite engine inspects each incoming request and can rewrite, redirect, or reject it based on rules; it is most often used to make cleaner-looking URLs. This directive turns that functionality on.

RewriteCond %{HTTP_REFERER} ^http://.*semalt.com [NC]

This directive is best explained by the experts, so the Apache documentation is quoted below. Basically, it sets the condition under which the following rule applies: the referrer must start with http://, followed by anything, followed by semalt.com. The [NC] at the end is called a flag and means the match is not case sensitive. Both details matter because the Semalt crawler tries to reinvent itself to get around filter rules: the .* also catches subdomains, which is how the crawler duplicates its name.

The RewriteCond directive defines a rule condition. One or more RewriteCond can precede a RewriteRule directive. The following rule is then only used if both the current state of the URI matches its pattern, and if these conditions are met. –Apache Documentation
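One caveat with the condition above: it only matches referrers that start with http://, and its unescaped dot matches any character, not just a literal dot. The following is a slightly tighter sketch, not a tested drop-in. It assumes mod_rewrite is available, and the IfModule wrapper and the extra anchoring are my additions rather than part of the original snippet.

```apache
# block visitors referred from semalt.com or any of its subdomains
<IfModule mod_rewrite.c>
    RewriteEngine On
    # https? accepts both http and https referrers
    # ([^/]+\.)? allows an optional subdomain prefix such as semalt.semalt.com
    # \. matches a literal dot; (/|:|\?|$) requires the host name to end there
    RewriteCond %{HTTP_REFERER} ^https?://([^/]+\.)?semalt\.com(/|:|\?|$) [NC]
    RewriteRule .* - [F]
</IfModule>
```

The trade-off is precision: this version will not fire on an unrelated host that merely contains the text semalt.com somewhere in its address, but it also will not catch look-alike domains, so check your own referral report before choosing between the two.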

RewriteRule .* - [F]

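The last directive applies to every request that meets the condition above. The pattern .* matches any URL, the - means the URL itself is left unchanged, and the [F] flag (short for forbidden) makes the server return a 403 error to the client.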

Using the [F] flag causes the server to return a 403 Forbidden status code to the client. While the same behavior can be accomplished using the Deny directive, this allows more flexibility in assigning a Forbidden status.
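For comparison, here is a rough sketch of the access-control route mentioned in that quote. It assumes Apache 2.4 or later, where the Require directive takes over the job of Deny, and it assumes that mod_setenvif and mod_authz_core are enabled and that your host lets .htaccess override them. The variable name spam_referer is purely illustrative.

```apache
# Assumes Apache 2.4+ with mod_setenvif and mod_authz_core.
# spam_referer is an illustrative variable name, not a built-in.
# Flag any request whose Referer header points at semalt.com (case-insensitive).
SetEnvIfNoCase Referer "^https?://([^/]+\.)?semalt\.com" spam_referer

# Allow everyone except requests carrying the flag; those receive a 403.
<RequireAll>
    Require all granted
    Require not env spam_referer
</RequireAll>
```

Use one approach or the other, not both. The rewrite version is the one explained in this post; this variant is only worth considering if mod_rewrite is not available on your server.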

I hope this helps you understand how the rewrite condition blocks the Semalt spam crawler from your site.
